I’m dubious of ‘hot takes’ on what just happened and what it means for the future – and there were loads of them going around after the US elections back in November.

But now that a few months have passed, I’ve found myself coming back to a few pieces, written by people smarter than me, on what lessons there might be for those of us interested in progressive politics here in the UK.

All of this comes with some caveats: the US is very different politically from the UK, we’re not due another General Election for a few years, and we should be spending far more energy learning from comparable democracies closer to home. On that, I’d recommend a read of this piece from the More in Common team on the French elections back in the summer.

Still, I think we need to at least take a moment to reflect on the lessons, both from the rise of the far-right populism emerging across the developed world and from what it tells us about how the electorate engages with politics.

- Your experience of the economy is not my experience of the economy – I found this piece by Andrew Pendleton an excellent read on how people experience the economy, and what that means for politics. There is lots of talk about growth and rising GDP, but, as Andrew writes, that’s not most people’s experience of the economy, and ‘the problem of stagnant average living standards, coupled with failing public services, along with high levels of inequality…is a potent mix’. The adage that it’s all about the economy is true, but how you experience the economy can be very different from how others do.

- Go to where people are and connect with them on a hyperlocal level – Campaign Lab, led by Hannah O’Rourke, has some practical lessons for progressive campaigners, bringing together what they learned working on Labour’s election victory with reflections from the US. One stands out: at a time when trust in national politics is collapsing, campaigners need to “root their efforts in local soil. The local level provides that crucial bridge between people, politics, and place – it’s where abstract policies become tangible and mean something to people”. Another is the challenge that progressive activists “waste too much money waiting for people to come to them, instead of going to where people are already organising and talking” (something very much echoed by Hahrie Han during her recent book tour in the UK).

- Campaigns are fought on terrain set by culture – I really appreciated this piece on Semafor (h/t Paul at Rally), with lessons from those involved in the Harris digital campaign on the role that culture plays in delivering a message: “Campaigns, in many ways, are last-mile marketers that exist on terrain that is set by culture, and the institutions by which Democrats have historically had the ability to influence culture are losing relevance”. It also sets out the challenge of not focusing only on the usual political spaces when ‘the race was going to come down to voters who do not pay attention to politics or mainstream news and instead get their information from people on YouTube, their friends’ Instagram stories, or links or memes dropped in a group chat’. These are two lessons that instinctively make sense when written out, but ones I see many campaigners overlook.

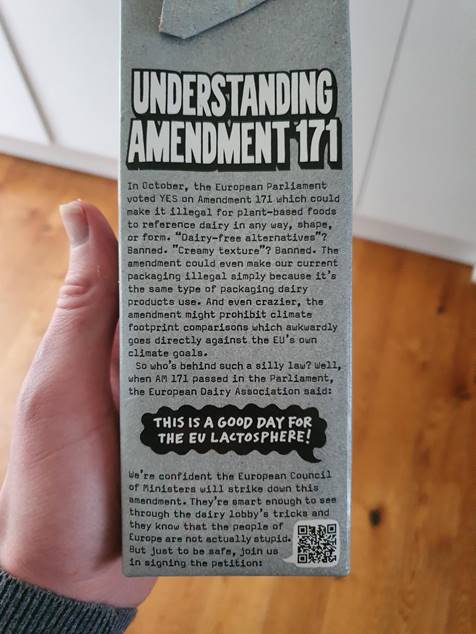

- How we communicate is changing, and quickly – a colleague passed on the short piece above, so I don’t know the original source, but I liked how it explored the ways strategic comms approaches are changing, with the key principles now being ‘volume, iterate rather than perfect, move fast and apologise rather than ask permission, and build up a community (“cult”) of people who are really passionate about your cause and talk directly to them rather than through intermediaries / gatekeepers’. You might not agree with everything in it, but it’s one to be aware of when thinking about how other brands, organisations and campaigns will be approaching communications.